
You can use Aurasma to do Augmented Reality body hacking! This was a happy accident that happened very recently, and is something we will most definitely be investigating further in the future!

The artist and critical engineer Julian Oliver (who will again feature in Brighton Digital Festival this year) has made some very interesting things with AR in the past, including this game:

And this fantastic project, called "the Artvertiser" (a computer encased in some retro-looking goggles, which replace billboards with art).

We absolutely love the message of this project, and its playful, subversive approach.

Augmented Billboards 2: The Artvertiser @ Transmediale 2010 from Julian Oliver on Vimeo.


In Aurasma, you can map 2D images, 3D models, and also sound to a trigger image. The app calls these mappings "Auras". Once you have made an Aura, you can add it to your channel. You can find and follow other people's channels, which lets you see any Auras they have made, and you can likewise share yours.

There are some example trigger images up in our Aurasma Facebook album so you can see it for yourself, or try poking around our website; there may be a few surprises lying around...

It is relatively quick and easy to link 2D content to trigger images, but I figured it was worth looking into how to get your own custom 3D content in there. I had been researching 3D scanning a bit, and had a rudimentary understanding of 3D modelling software, so this was the perfect excuse to learn.


You got all that, yeah?

This tutorial gives a good overview of how to get a textured 3D model from Maya into Aurasma, and was very helpful, as Aurasma requires a very specific set and format of files to work properly.

This tutorial is great, but it starts with an already made 3D model complete with textures. I wanted to also learn how to create, model, and texture things from scratch.

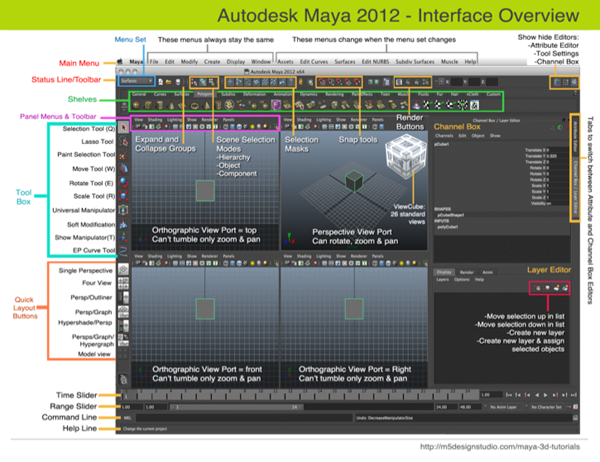

There are a ton of different Maya tutorials out there at lots of different levels, so before diving in I spent a while watching them and soaking up what on earth all the different bits of the interface are for, and how people use it for different tasks. Once you get your head round the basics, understand what is possible, and learn a few common shortcuts, it is an incredibly powerful, quick and easy-to-use bit of software.

In order to share what I learned during this period of research, I created my first ever video tutorial. Starting completely from scratch, I go through how to:

- Download and install a trial version of Maya

- Create and edit 3D models

- Add textures and map them to the faces of the models

- Use keyframes to add animation to the model (and "bake" these animations so Aurasma can read them)

- Export all the files needed for Aurasma

- Use Aurasma's dashboard to assign your model to a trigger image, and overlay it as an "Aura"
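For anyone wondering what "baking" actually does: as we understand it, it replaces the handful of keyframes you placed by hand with a key on every single frame, so the playback no longer depends on the software's interpolation between keys. The Python sketch below illustrates that idea only; the `bake` function and the `(frame, value)` keyframe format are hypothetical, and it uses plain linear interpolation, whereas Maya's animation curves default to smoother tangents. In practice Maya does this for you when you bake.

```python
from bisect import bisect_right


def bake(keys, start, end):
    """Hypothetical illustration of baking: sample sparse (frame, value)
    keyframes at every integer frame from start to end, using linear
    interpolation (a simplification of Maya's default curves)."""
    keys = sorted(keys)
    frames = [f for f, _ in keys]
    baked = []
    for frame in range(start, end + 1):
        if frame <= frames[0]:
            # Before the first key: hold the first value
            value = keys[0][1]
        elif frame >= frames[-1]:
            # After the last key: hold the last value
            value = keys[-1][1]
        else:
            # Find the pair of keys surrounding this frame
            i = bisect_right(frames, frame)
            (f0, v0), (f1, v1) = keys[i - 1], keys[i]
            t = (frame - f0) / (f1 - f0)
            value = v0 + t * (v1 - v0)
        baked.append((frame, value))
    return baked


# Two hand-placed keys (e.g. a robot arm joint rotating 0 -> 90 degrees)
# become eleven baked keys, one per frame.
baked = bake([(0, 0.0), (10, 90.0)], 0, 10)
print(baked[5])
```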

Tutorial video:

Here is a video demonstrating some of the 2D, 3D, and animated Auras we made:

And here are some screenshots of them from Aurasma:


This robot arm is animated (as you can see in the video above).


Some kind of biomechanical bird / robot arm thing. This was a result of my experiments to try and create jointed models and rig them for animation.


David Allistone cleverly produced this animated overlay to bring the LED matrix to life. It also has music, which makes it more fun, and is something we will be investigating further in the future.


Experimenting with minimal, abstract wireframe picture frames for the website


Slightly more polished-looking picture frames


This abstract floating smurf model is definitely my favourite.

The 3D scanned smurf model above, and the animated LED matrix were the two most compelling AR experiences we created, and we will definitely be developing and researching these avenues further over the coming months.

Unfortunately, cleaning up the 3D smurf model into a suitable format was not at all easy, and took a whole lot of trial and error to get it working nicely (see our 3D scanning and modelling blog post). Refining this workflow is a priority task for us in the future.

Once we have a decent library of 3D toy models, we would like to create a small book with images on each page that we can use as a portable exhibition. The book would be filled with bespoke trigger images that would stand on their own as pieces of art, and with the models overlaid, it would also be a great way to showcase creations from previous workshops (plus it would save us lugging a whole load of extra toys around!).

Unfortunately, as with a lot of our technical research, we are only just getting to the exciting bits now, as it has taken a while to discover the right tools for the job and start learning how to use them. In July and August, though, we started to integrate these into some activities and workshops.

In July we did a series of events with Home Live Art (which, incidentally, came about through conversation and collaboration with mASCot at our new R&D hub in Prestamex house). We used these events as a testing ground for taking content created by children and mapping it to images, which could be printed out and attached to cardboard creations in a Robot Relays workshop.

There are blog posts about these on our events blog, here (Hopscotch festival) and here (Shuffle festival).

Here are some images from the day:
